In the early days of my career, I had a hard time telling reverse proxies from forward proxies and web servers from load balancers, even after going through many articles on the internet. So I thought I would write about them in plain English to help people understand them.

This article is part of the System Architecture series. Check out my article Introduction to System Architecture Design to learn the basics; in it, I walk you through scaling an imaginary startup.

Suit up, and let’s dive right in. Here are the topics we are going to cover today.

- (Forward) Proxy vs Reverse Proxy

- Application Server vs Web Server

- Reverse Proxy as Load Balancer

- Policies and health checks

- Types of load balancers

(Forward) Proxy vs Reverse Proxy

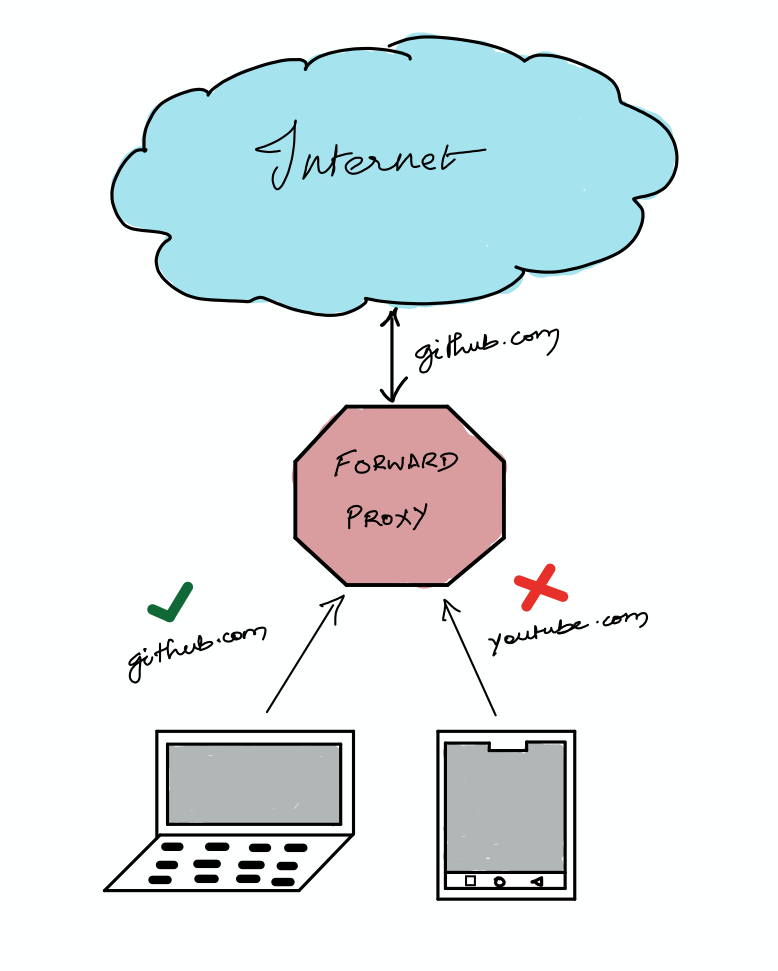

A proxy is a server or piece of software that sits between clients and the internet. It intercepts requests and makes decisions based on them.

There are two types: forward proxies (sometimes simply called proxies) and reverse proxies.

A forward proxy sits on the client side and intercepts requests going from the client to the internet. Here the client can be your Chrome browser or your mobile phone. A simple example of a forward proxy is the server that blocks social media websites in schools or corporate offices.

On certain corporate Wi-Fi networks, you are blocked from accessing social media websites like YouTube, Twitter, or Reddit, and of course all NSFW content. A forward proxy server does this. Besides filtering outgoing requests, a forward proxy can also be used for rate limiting and for logging the websites that users visit.
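The filtering part can be sketched in a few lines of Python. This is a minimal illustration and does not describe how any particular proxy product works. It assumes a hypothetical blocklist (`BLOCKED_DOMAINS`) and shows only two things: the allow/block decision and the logging of visited hosts. It does not relay traffic.

```python
from urllib.parse import urlsplit

# Hypothetical blocklist; a real proxy would load this from its configuration.
BLOCKED_DOMAINS = {"youtube.com", "twitter.com", "reddit.com"}

# Hosts that clients tried to visit, kept for auditing.
visited_log: list[str] = []


def is_blocked(host: str) -> bool:
    """True if host is a blocked domain or any subdomain of one."""
    labels = host.lower().rstrip(".").split(".")
    # For "www.youtube.com", check "www.youtube.com", "youtube.com" and "com".
    return any(".".join(labels[i:]) in BLOCKED_DOMAINS for i in range(len(labels)))


def handle_outgoing(url: str) -> str:
    """Decide what the forward proxy does with a client's outgoing request."""
    host = urlsplit(url).hostname or ""
    visited_log.append(host)  # log every attempted visit, allowed or not
    if is_blocked(host):
        return "403 Forbidden"  # refuse to forward the request
    return "forward"  # relay the request to the internet
```

With this sketch, `handle_outgoing("https://www.youtube.com/watch?v=abc")` returns `"403 Forbidden"`, while a request to `https://example.org/` is forwarded. Because the check matches a domain and its subdomains, `www.youtube.com` is caught. An unrelated domain such as `notyoutube.com` is not.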

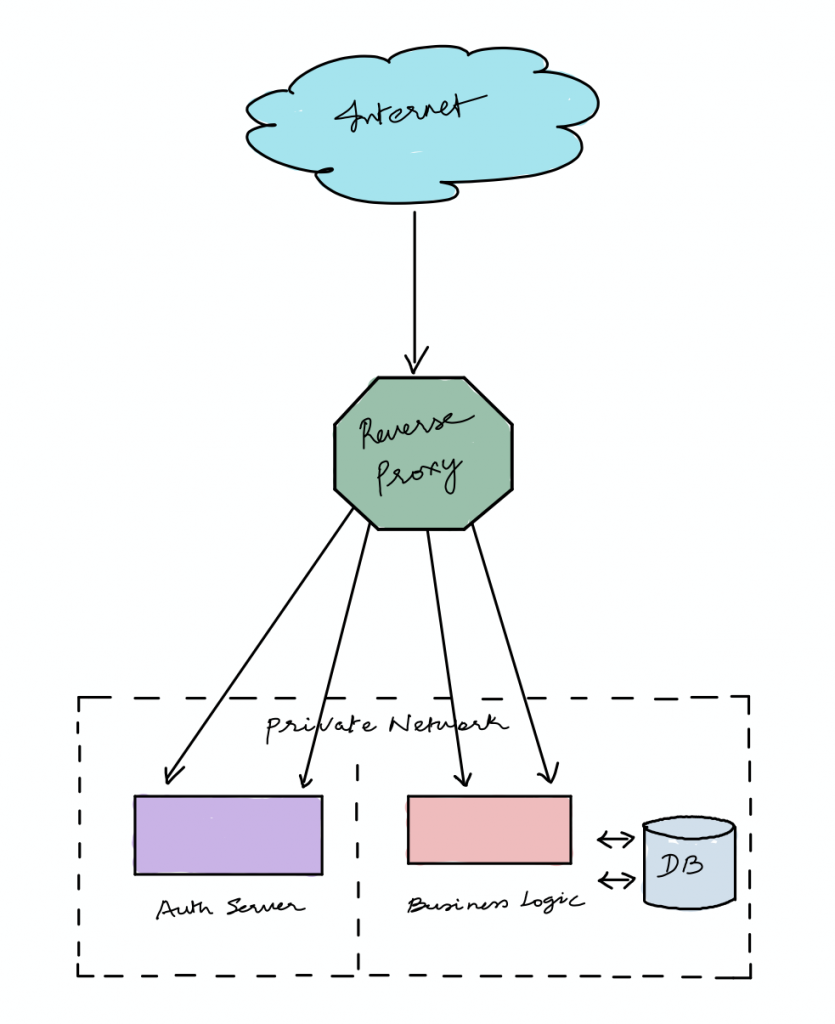

A reverse proxy, on the other hand, sits on the server side. It intercepts incoming requests and routes them to the appropriate backend servers.

For instance, say our web app has two servers: one that handles authentication and another that handles the business logic by interacting with the database. We should not expose those two servers directly to the internet.

The primary reason is security: you don’t want to expose the endpoints of every server you run in the backend.

Another, common-sense reason is that the frontend should not have to decide which endpoint to hit based on business logic. The frontend knows only a single endpoint, and that endpoint takes care of routing requests to the backend servers.

This is why we use reverse proxies to route requests to the backend servers. In day-to-day production systems, we typically use web servers as reverse proxies.
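Here is a minimal Python sketch of the routing decision a reverse proxy makes for the two-server example above. The backend addresses and the `/auth/` and `/api/` path prefixes are hypothetical. The point is that clients only ever talk to the proxy, and only the proxy knows where each request should go.

```python
from typing import Optional

# Hypothetical internal backends; clients never see these addresses.
ROUTES = [
    ("/auth/", "http://10.0.0.1:8001"),  # authentication server
    ("/api/", "http://10.0.0.2:8002"),   # business-logic server
]


def route(path: str) -> Optional[str]:
    """Return the backend URL for a request path, or None if no route matches."""
    for prefix, backend in ROUTES:
        if path.startswith(prefix):
            return backend + path  # forward the original path to that backend
    return None  # the proxy would answer with a 404 itself
```

For example, `route("/auth/login")` returns `"http://10.0.0.1:8001/auth/login"`. Prefixes are checked in order, so more specific prefixes should be listed first.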

Application Server vs Web Server

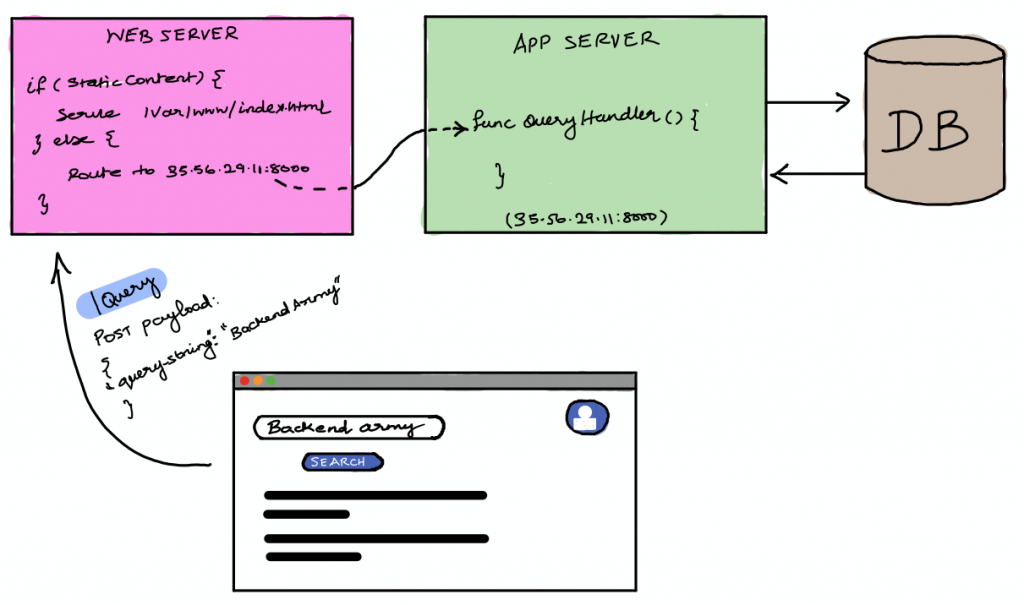

A web server serves the static content of a website.

An application server handles the dynamic content and the business logic.

But these terms are sometimes used interchangeably. Let’s say you have implemented a search engine that queries a database and displays the results on a beautiful web page.

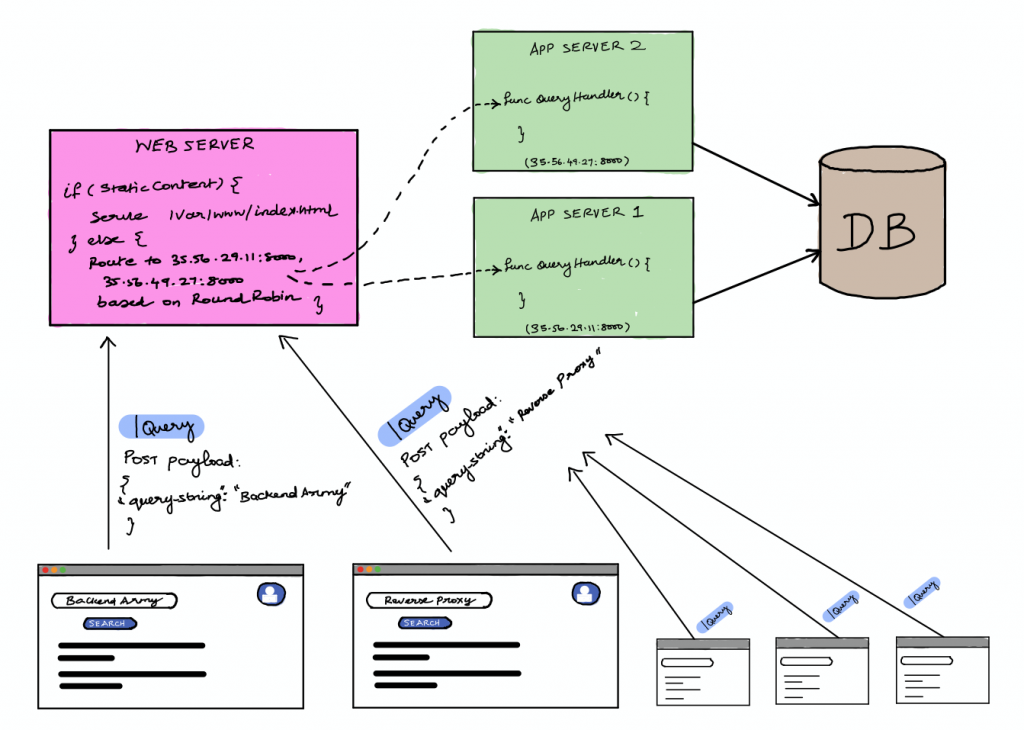

You would deploy the static assets and the application server behind the web server. The web server serves static assets like HTML/CSS files and images. It also routes API requests to the application server, which handles the business logic.

Modern web frameworks like Django and Flask also ship with lightweight built-in web servers meant for development. Never use these in production: their own documentation warns that they are not built or audited for production use. (Go’s net/http is a different case. It is a production-grade server, although it is still common to put a dedicated web server in front of it.)

For production, always go for a robust, battle-tested web server like Nginx, Apache, or Caddy.

Reverse Proxy as Load Balancer

As we saw, a reverse proxy routes requests to different servers that handle different business logic, while exposing a single public-facing endpoint. Another function of a reverse proxy is load balancing.

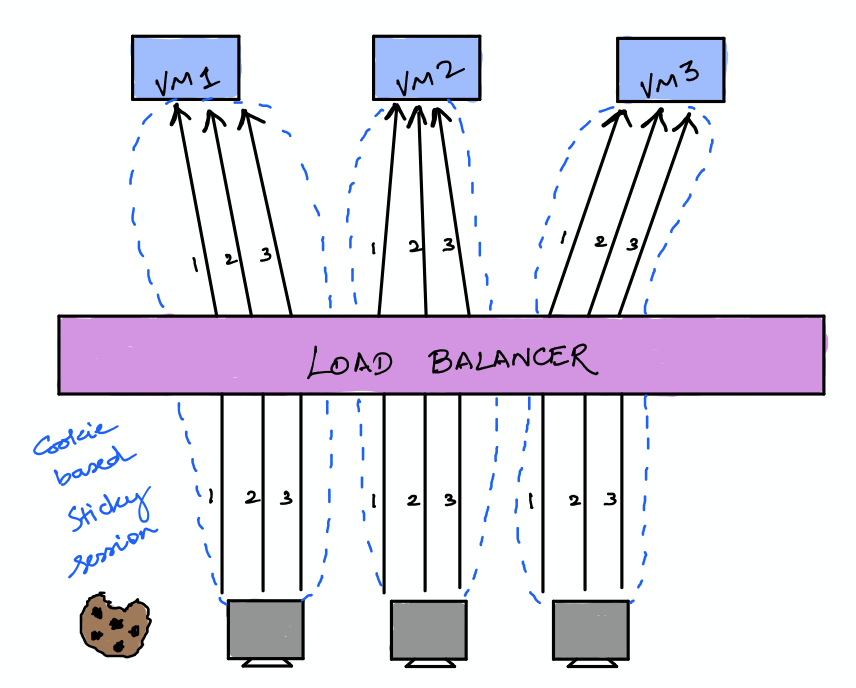

In the previous architecture, we had only one app server. It served the dynamic content of the web pages, mostly APIs that retrieve data from the database. But what if we need to scale our app servers to handle more traffic? In that case, we need to distribute the incoming load across multiple app servers.

If we configure our web server with the IP addresses of the different VMs and tell it how to distribute the load across them, the web server acts as a load balancer.

Now our web server distributes incoming traffic across both app servers (VMs). But on what basis does it distribute the load? To answer that, you need to know about load balancing policies.

Policies and Health checks

Load balancing policies are nothing but the algorithms used by the load balancers to distribute traffic across the servers.

The default policy depends on the load balancer. Some load balancers use round robin, some use IP hashing, and some simply distribute traffic randomly. Let’s look at some commonly used load balancing algorithms.

Random: As the name suggests, the load balancer simply routes each request to a randomly selected server.

Round Robin: The load balancer sends incoming requests to each server in turn, cycling through the list without any priority.
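The random and round-robin policies can each be sketched in a few lines of Python. The sketch assumes a hypothetical pool of three app-server addresses:

```python
import itertools
import random

# Hypothetical pool of app-server VMs.
SERVERS = ["10.0.0.11", "10.0.0.12", "10.0.0.13"]


def pick_random(servers: list[str]) -> str:
    """Random policy: every server is equally likely to get the next request."""
    return random.choice(servers)


class RoundRobin:
    """Round-robin policy: hand out servers one after another, in a fixed cycle."""

    def __init__(self, servers: list[str]) -> None:
        self._cycle = itertools.cycle(servers)

    def pick(self) -> str:
        return next(self._cycle)
```

Calling `pick()` four times on a `RoundRobin` built from `SERVERS` yields `10.0.0.11`, `10.0.0.12`, `10.0.0.13`, and then `10.0.0.11` again.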

Least Connections: The load balancer routes each request to the server currently handling the fewest concurrent connections. The server with the fewest open connections is likely the least busy and has the most resources available, so traffic is routed there.
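Here is a minimal sketch of least connections. It assumes the load balancer calls `acquire()` when it opens a connection to a backend and `release()` when that connection closes. Both method names are hypothetical.

```python
class LeastConnections:
    """Least-connections policy: route to the server with the fewest open connections."""

    def __init__(self, servers: list[str]) -> None:
        self.active = {server: 0 for server in servers}  # open connections per server

    def acquire(self) -> str:
        """Choose a server for a new connection and count it as open."""
        server = min(self.active, key=self.active.get)  # ties: first registered wins
        self.active[server] += 1
        return server

    def release(self, server: str) -> None:
        """Record that a connection to server has closed."""
        self.active[server] -= 1
```

Ties are broken by registration order, because `min()` returns the first smallest entry it encounters.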

Least Latency: The load balancer selects the backend server with the lowest response time. In some implementations, it sends a lightweight probe, such as an OPTIONS request, and forwards the original traffic to whichever server responds first. Others track the average response time of recent requests instead.
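One way to implement least latency is to keep a moving average of each server’s observed response times, rather than probing with OPTIONS requests. The sketch below assumes the load balancer reports each request’s duration through a hypothetical `record()` method. The smoothing factor `alpha` is an arbitrary illustrative value.

```python
from typing import Optional


class LeastLatency:
    """Least-latency policy using a moving average of observed response times."""

    def __init__(self, servers: list[str], alpha: float = 0.3) -> None:
        self.alpha = alpha  # weight of the newest sample (illustrative value)
        self.avg_ms: dict[str, Optional[float]] = {s: None for s in servers}

    def pick(self) -> str:
        # Unmeasured servers (None) sort first so each one gets tried;
        # after that, the lowest average response time wins.
        return min(self.avg_ms, key=lambda s: (self.avg_ms[s] is not None, self.avg_ms[s] or 0.0))

    def record(self, server: str, elapsed_ms: float) -> None:
        """Fold one request's duration into the server's exponential moving average."""
        old = self.avg_ms[server]
        if old is None:
            self.avg_ms[server] = elapsed_ms
        else:
            self.avg_ms[server] = self.alpha * elapsed_ms + (1 - self.alpha) * old
```

Servers that have not been measured yet are picked first, so every backend gets at least one request before the averages take over.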

IP Hashing: Traffic is distributed based on a hash of the source IP address. Each server behind the load balancer is mapped to a subset of the hash values, so all traffic from a given source IP is routed to the same server.

URL Hashing: This works like IP hashing, except that the hash is computed from the request URL. All requests for a given URL go to the same server. This is useful for caching, because the cached content for a URL is always stored on, and served from, the same server.
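Both hashing policies boil down to the same function: hash a key (the client IP or the request URL) and map it onto the server list. Here is a minimal sketch, assuming a hypothetical server pool. It uses SHA-256 because Python’s built-in `hash()` for strings is randomized per process, which would give a different mapping after every restart.

```python
import hashlib

# Hypothetical pool of app-server VMs.
SERVERS = ["10.0.0.11", "10.0.0.12", "10.0.0.13"]


def pick_by_hash(key: str, servers: list[str]) -> str:
    """Map a key to a server using a hash that is stable across processes."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return servers[int.from_bytes(digest[:8], "big") % len(servers)]


def pick_by_ip(client_ip: str) -> str:
    """IP hashing: the same client IP always lands on the same server."""
    return pick_by_hash(client_ip, SERVERS)


def pick_by_url(url: str) -> str:
    """URL hashing: the same URL always lands on the same server (and its cache)."""
    return pick_by_hash(url, SERVERS)
```

With simple modulo hashing like this, adding or removing a server remaps most keys to different servers, which throws away the per-server caches. Consistent hashing is the usual technique for reducing that reshuffling.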

Sticky Sessions: Some traffic needs to maintain state. For example, when a user logs in, they may need to stay connected to a single backend server that holds their session. Sticky sessions achieve this. The load balancer maintains cookie-based affinity with the client, so consecutive requests in the session are served by the same server.

However, this policy is generally not recommended, because the whole point of load balancing is to distribute traffic. If you find yourself forced to use it, you might need to revisit your architecture, for example by keeping sessions in a shared store instead of on individual servers.
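If you do need sticky sessions, here is a minimal sketch of cookie-based affinity layered on round robin. The cookie name `lb_server` is hypothetical. For simplicity, the cookie stores the server address directly, whereas real load balancers typically store an opaque or encoded value.

```python
import itertools
from typing import Optional

COOKIE = "lb_server"  # hypothetical affinity cookie name


class StickySessions:
    """Cookie-based affinity on top of round robin."""

    def __init__(self, servers: list[str]) -> None:
        self.servers = set(servers)
        self._rr = itertools.cycle(servers)

    def pick(self, cookies: dict[str, str]) -> tuple[str, Optional[str]]:
        """Return (server, cookie value to set, or None if no cookie is needed)."""
        pinned = cookies.get(COOKIE)
        if pinned in self.servers:
            return pinned, None  # returning client: keep it on the same server
        server = next(self._rr)  # new client, or unknown server: assign one
        return server, server  # ask the client to remember the assignment
```

The first request without the cookie is assigned a server and told to set the cookie. Later requests that carry the cookie stay on that server, unless the cookie names a server that is not in the pool.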

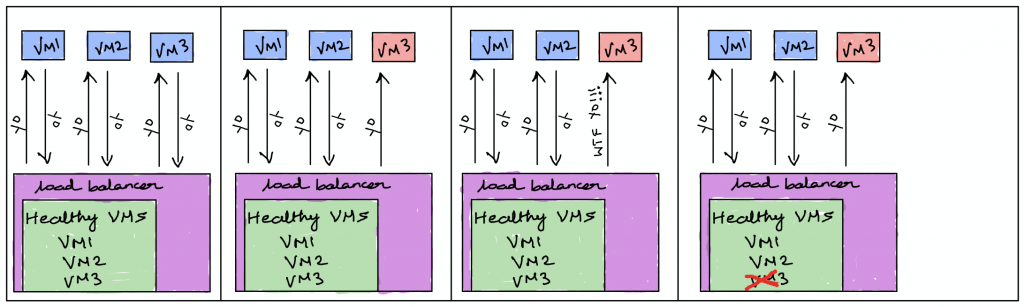

To avoid routing traffic to an unresponsive VM, the load balancer periodically sends health probes to the backend servers, for example by opening a TCP connection or requesting a health-check URL. If a server does not respond to the health probes, the load balancer assumes it is dead and stops sending traffic to it.

Even after a server stops responding, the load balancer keeps probing it as long as it is registered, and it starts sending the server traffic again once it comes back up.
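Here is a minimal sketch of this behavior, assuming a plain TCP connection check. Many load balancers can request an HTTP health endpoint instead. `HealthTracker` is a hypothetical name, and a real load balancer would call `run_checks()` on a timer rather than by hand.

```python
import socket


def tcp_health_check(host: str, port: int, timeout: float = 1.0) -> bool:
    """Healthy if a TCP connection can be opened within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # connection refused, unreachable, or timed out
        return False


class HealthTracker:
    """Keeps probing every registered server; only healthy ones receive traffic."""

    def __init__(self, servers: list[tuple[str, int]]) -> None:
        self.servers = servers  # registered backends
        self.healthy = set(servers)

    def run_checks(self) -> None:
        # Probe all registered servers, including ones already marked dead,
        # so that a recovered server is put back into rotation automatically.
        for server in self.servers:
            if tcp_health_check(*server):
                self.healthy.add(server)
            else:
                self.healthy.discard(server)

    def eligible(self) -> list[tuple[str, int]]:
        """Servers that may receive traffic right now."""
        return [s for s in self.servers if s in self.healthy]
```

Production load balancers usually also require several consecutive failures or successes before they change a server’s state. This keeps a single slow response from making a server flap in and out of rotation.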

Types of load balancers

Before going into the different types of load balancers, you should know that most load balancers in use today are software load balancers. You get one by installing software like Nginx or HAProxy.

Hardware load balancers are also available from vendors like Cisco. These days the difference is subtle: a hardware load balancer gives you more power in a single chassis, whereas a software load balancer is easier to scale horizontally.

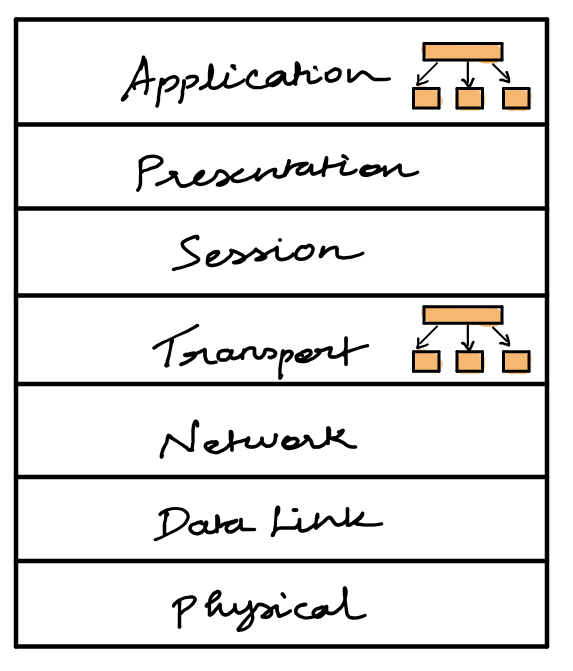

Load balancers are classified by OSI layer: the layer at which a load balancer operates and makes its routing decisions defines its type. There are two major types: Layer 4 load balancers and Layer 7 load balancers.

A Layer 4 load balancer makes routing decisions at the transport layer (TCP). It looks only at connection-level information, such as IP addresses and ports, and forwards the packets to the backend servers without inspecting their contents. As a result, it has no access to the HTTP request itself.

A Layer 7 load balancer, on the other hand, makes routing decisions at the application layer (HTTP). Layer 7 load balancers are more flexible because they can read the HTTP request, so you can route incoming traffic based on its content, such as the headers or the payload. On Amazon Web Services, they are known as Application Load Balancers (ALBs).
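To make the difference concrete, here is a small Python sketch. The Layer 4 function has only connection details, such as the destination port, to work with. The Layer 7 function parses the HTTP request and routes on the `Host` header and the path. The pool names and routing rules are hypothetical, and the parser handles only a simple, well-formed HTTP/1.1 request head.

```python
def parse_http_request(raw: bytes) -> tuple[str, str, dict[str, str]]:
    """Parse a simple HTTP/1.1 request head into (method, path, headers)."""
    head = raw.split(b"\r\n\r\n", 1)[0].decode("iso-8859-1")
    request_line, *header_lines = head.split("\r\n")
    method, path, _version = request_line.split(" ", 2)
    headers: dict[str, str] = {}
    for line in header_lines:
        name, _, value = line.partition(":")
        headers[name.strip().lower()] = value.strip()  # header names are case-insensitive
    return method, path, headers


def layer4_pick(dst_port: int) -> str:
    """Layer 4: only addresses and ports are visible, so route on those."""
    return "tls-pool" if dst_port == 443 else "web-pool"


def layer7_pick(raw_request: bytes) -> str:
    """Layer 7: the HTTP request is parsed, so its content can drive routing."""
    _method, path, headers = parse_http_request(raw_request)
    if headers.get("host", "").startswith("api."):
        return "api-pool"
    if path.startswith("/images/"):
        return "static-pool"
    return "web-pool"
```

For example, `GET /images/logo.png` goes to `static-pool`, and any request whose `Host` header starts with `api.` goes to `api-pool`.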

Going further

You can experiment with reverse proxies by setting up a web server as a load balancer on your local machine. I used to recommend Nginx for this, but now I can’t stop recommending Caddy. You can download a single binary from Caddy’s website and set up a local load balancer with different policies.

You can also sign up with a cloud provider like Google Cloud Platform, Amazon Web Services, or DigitalOcean. There you can experiment by spinning up VMs and putting them behind a load balancer.

In upcoming posts, I will show you how to set up a reverse proxy as a local load balancer and route traffic to different endpoints. Follow me on Twitter to get notified when I publish them. I hope you learned a thing or two. Let me know your thoughts in the comments below.
